2024-05-01

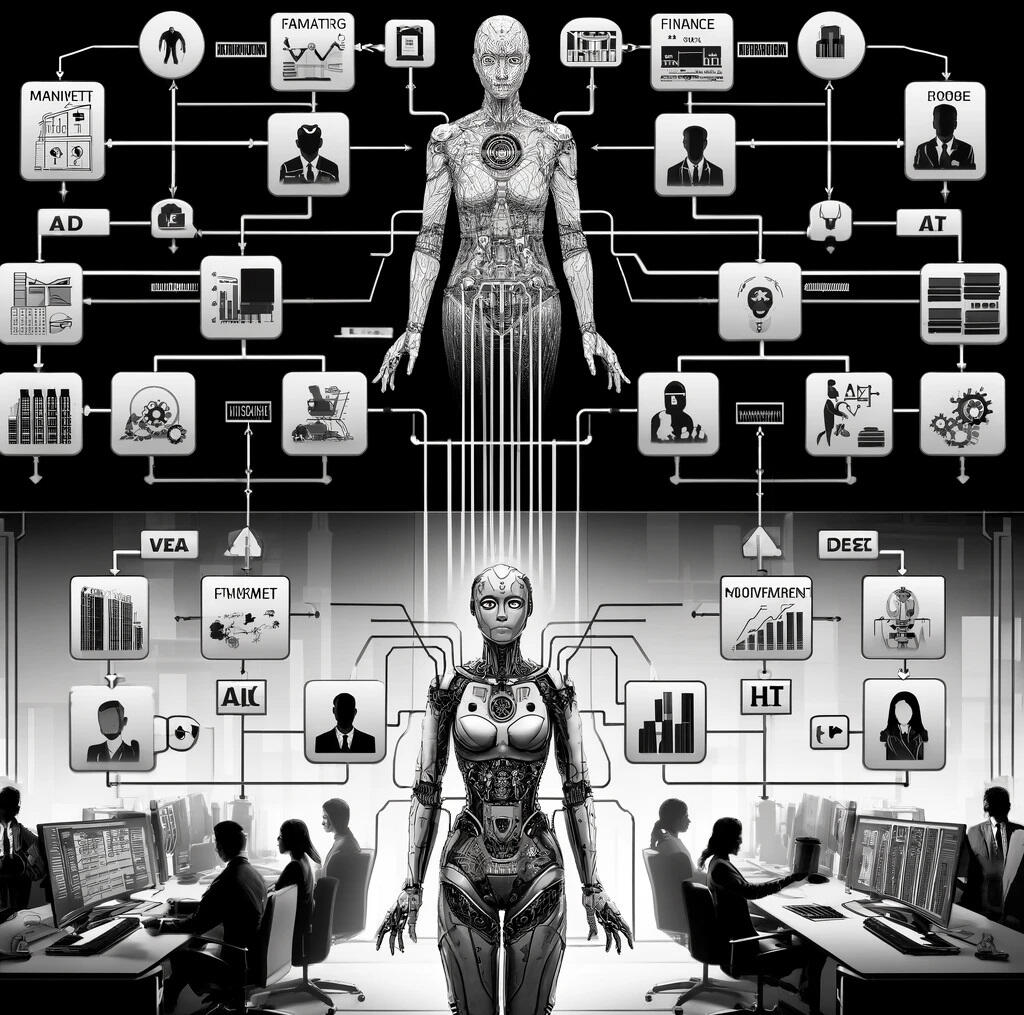

Impending AI transformations

As we edge closer to a future dominated by AI agents in the workforce, the traditional structures of organisational information flow, both vertical and horizontal, are due for a transformation. In typical human-centric workplaces, information cascades from executives to individual contributors (and vice versa) and spans departments to foster collaboration. This flow, mediated by human judgment, ensures that information is (usually) shared with relevance and sensitivity, respecting organisational hierarchies.

The Shift to an AI Workforce

However, the integration of AI agents as primary workforce members introduces some complex challenges and questions. With AI supplementing human roles, the established norms of hierarchy and departmental boundaries warrant reevaluation. We should really question whether AI should mimic human-like information flows and hierarchies and, if so, how AI can autonomously manage the nuances of sensitive or relevant information exchanges.

A concrete example

(Quick primer for those less familiar with GenAI/RAG Applications)

An AI workforce is powered by organisational context. Generally we call these RAG GenAI applications, and all this means (for the layman) is that the Large Language Model is provided with organisation-specific data to help it formulate responses that would otherwise not be possible. For simple applications this could be a collection of documents (like press releases). For more forward-thinking applications the context would span every single piece of information within an organisation (every email, every Slack message, every slide and memo, you get the gist).
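
For the code-inclined, here is a minimal sketch of that idea: find the most relevant organisational documents for a question, then place them ahead of the question in the prompt. Everything in it is an assumption for illustration (the `Document` class, the `retrieve` and `build_prompt` helpers, the sample documents), and plain word overlap stands in for the vector search a real RAG application would typically use.

```python
from dataclasses import dataclass


@dataclass
class Document:
    title: str
    text: str


def score(query: str, doc: Document) -> int:
    """Count how many distinct query words also appear in the document."""
    query_words = set(query.lower().split())
    doc_words = set(doc.text.lower().split())
    return len(query_words & doc_words)


def retrieve(query: str, docs: list[Document], k: int = 2) -> list[Document]:
    """Return the k documents with the highest word-overlap score."""
    ranked = sorted(docs, key=lambda d: score(query, d), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[Document]) -> str:
    """Put the retrieved documents ahead of the question for the LLM."""
    context_block = "\n".join(f"[{d.title}] {d.text}" for d in context)
    return f"Context:\n{context_block}\n\nQuestion: {query}"


# Hypothetical organisational data.
docs = [
    Document("Press release", "We launched the new billing product in March"),
    Document("Lunch menu", "Tuesday is pasta day in the canteen"),
]

question = "When was the billing product launched"
prompt = build_prompt(question, retrieve(question, docs, k=1))
print(prompt)
```

The string in `prompt` is what would be sent to the model; the model call itself is left out because it depends on the provider.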

(end primer)

Your company now has an AI agent that supports your legal department. Let's call him/her/it Aggie. Aggie needs access to a broad spectrum of data in order to fulfil her duties. Senior legal partners use Aggie by sending her emails with work to do (such as generating T&Cs, or reviewing a query from a 3rd party), and Aggie completes these tasks seamlessly, having access to memos between partners as well as robust documentation of the company, software, processes and so forth.

At the same time, you have a separate AI agent that supports your marketing department. Call him Mark. Mark needs access to some of the same documents that Aggie has (e.g. press releases, to ensure his comms are aligned with previous company announcements). But Mark doesn't need access to a memo sent from a senior legal partner to a director, which our friend AI-Aggie would need.

The solution to this problem isn't trivial. From a tech standpoint: you can't (or shouldn't) be building dedicated information lakes for each agent. Every additional AI agent would mean another lake to build and maintain, significantly increasing time to deployment (i.e. development cost) and tech debt. From an operational standpoint: who governs access for Mark and Aggie?

A Much-Needed Framework
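
Taking Aggie and Mark as the running example, one possible shape for this kind of access control is a single store in which every document carries metadata, with each agent's view filtered by the scopes it was granted. The sketch below is a rough illustration under stated assumptions, not a definitive design: the `Document` and `Agent` classes, the `can_access` and `visible_documents` helpers, the department names and the numeric seniority levels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    departments: frozenset[str]  # horizontal scope: departments allowed to see it
    seniority: int               # vertical scope: minimum level required (1 = all staff)


@dataclass(frozen=True)
class Agent:
    name: str
    departments: frozenset[str]  # departments ticked when onboarding the agent
    max_seniority: int           # highest seniority level the agent may read


def can_access(agent: Agent, doc: Document) -> bool:
    """Allow access only if both the department and seniority checks pass."""
    shares_department = bool(agent.departments & doc.departments)
    senior_enough = agent.max_seniority >= doc.seniority
    return shares_department and senior_enough


def visible_documents(agent: Agent, docs: list[Document]) -> list[Document]:
    """Filter one shared store down to what this agent is allowed to retrieve."""
    return [d for d in docs if can_access(agent, d)]


# One shared store instead of a dedicated lake per agent (assumed sample data).
docs = [
    Document("Press release", frozenset({"legal", "marketing"}), 1),
    Document("Partner memo", frozenset({"legal"}), 3),
]
aggie = Agent("Aggie", frozenset({"legal"}), 3)
mark = Agent("Mark", frozenset({"marketing"}), 1)

print([d.title for d in visible_documents(aggie, docs)])  # ['Press release', 'Partner memo']
print([d.title for d in visible_documents(mark, docs)])   # ['Press release']
```

Adding a third agent here means creating one more `Agent` record rather than another copy of the data, which is the main appeal of filtering on metadata. The question of who decides each agent's scopes is untouched by the code.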

To address these challenges, the implementation of sophisticated access frameworks becomes essential. How I envision it functioning: all information is fed into an organisation’s (vectorized) information/data lake, and is tagged with comprehensive metadata. This includes details like the information’s source, scope, author, intended audience, and the level of seniority it pertains to, allowing AI agents to effectively navigate, retrieve, and interact with data pertinent to their roles.

In an ideal scenario, when 'onboarding' the AI agent, you would simply tick which 'lakes' the agent can and can't access. Lakes would exist at both horizontal (e.g. by department) and vertical (e.g. by seniority) levels, as opposed to by 'use-case', which is terribly short-sighted.

Whether you'd enforce this through separate mini data lakes, or let the LLM infer it, is something I haven't solved for yet, though it is a fun thought exercise. The former option provides more security (it ensures data doesn't leave a domain it's not supposed to) but still ultimately relies on humans to configure (and therefore has the potential to be fraught with gatekeeping and siloed agendas).

What's intriguing to me is that this problem only exists in a hybrid human/AI workforce. If the workforce were entirely agent-based, the need for these controls would dissipate.

AI in the Workforce: An Inevitable Transition

Organisations must embrace AI to stay competitive; this is obvious. Going beyond basic 'AI literacy' or simple tasks like drafting sales pitches through tools like GPT, Gemini, or MetaAI, companies not working towards an agent-powered workforce are, in my humble opinion, merely treading water.

Autonomous agents are now a tangible reality, capable of directly addressing queries that would traditionally wind through a hierarchy to burden some poor data analyst with an ever-increasing list of requests. But I can assure you basic insights are not all these agents can do. They can manage complex negotiations with precise calculations to offer reasoned estimates. They can schedule meetings around conflicting calendar entries. They can pre-emptively identify conflicting/orthogonal OKRs. Essentially, if it can be done on a computer, they can do it.

(Of course they can also drive, cook, and clean, but the capital investment required for those things is beyond the reach of most small companies, so I'm not going to cover it here.)

A small (but visionary) segment of the market recognizes the advantage of a human-like digital workforce that integrates seamlessly with familiar platforms (Email, Slack, Chime, etc.), moving beyond the rudimentary functions of AI co-pilots and poorly built GPT-wrappers to truly enhance productivity and decision-making. From my experience this tends to be smaller organisations with very clear data-driven strategies.

Challenges in Larger Organisations

As we've touched on, for AI agents to thrive, they require access to an exhaustive amount of organisational data: emails, Slack messages, memos, Jira tickets, slides, Figma boards, the whole shebang. And if you skimp, you're guaranteed to be out-performed by competitors' agents powered by better (more complete) context.

Herein lies the biggest challenge for large organisations and, in my opinion, the reason smaller competitors across a range of industries will start competing at scale and pace: convincing a large org to expose all of its information is a monumental task in terms of the scope of the data (number of systems), privacy laws (open question: should emails and messages sent using company systems be used to power decision making for the AI workforce?) and departmental alignment (how much data from department X gets shared with agents from department Y). Smaller orgs don't face these challenges, and if they do, it's at a far smaller scale.

Closing thoughts

This pivotal area of AI infrastructure and data access control for autonomous agents remains heavily under-invested.

At the chip level, Nvidia (and increasingly AMD) are pulling their weight.

At the foundational model level, big tech and the open-source community are absolutely pulling their weight, making strides in foundational model progress.

That leaves us with the last 2 pillars, AI infrastructure and AI agents, where, quite frankly, I am somewhat disappointed. I'd like to see fewer tech companies building gimmicky GPT-wrappers, and more effort focused on sophisticated AI infrastructure that is capable of managing nuanced data access for AI agents. I believe this could be achieved via either cloud-agnostic IAM policies for the agents, or a consistent metadata schema, such that a company could switch out agent providers (and, by extension, foundational models) without having to reinvent the agent-access wheel. (For this to work, pillars 3 (infra) and 4 (agents) need to be in lock-step.)

What is the best path to lift-and-shift traditional information sharing mechanisms to an AI workforce?

Will small orgs make use of this window to compete at a scale never previously accessible?

I don't know, but the next 2-5 years will be an enjoyable period of transformation.

David Searle

Image generated using AI. The rest of this is 100% my terrible writing.